Chapter 6: Architecture and Equilibria

Liu Ruihua (刘瑞华), Luo Xuemei (罗雪梅)
Advisor: Zeng Ping (曾平)

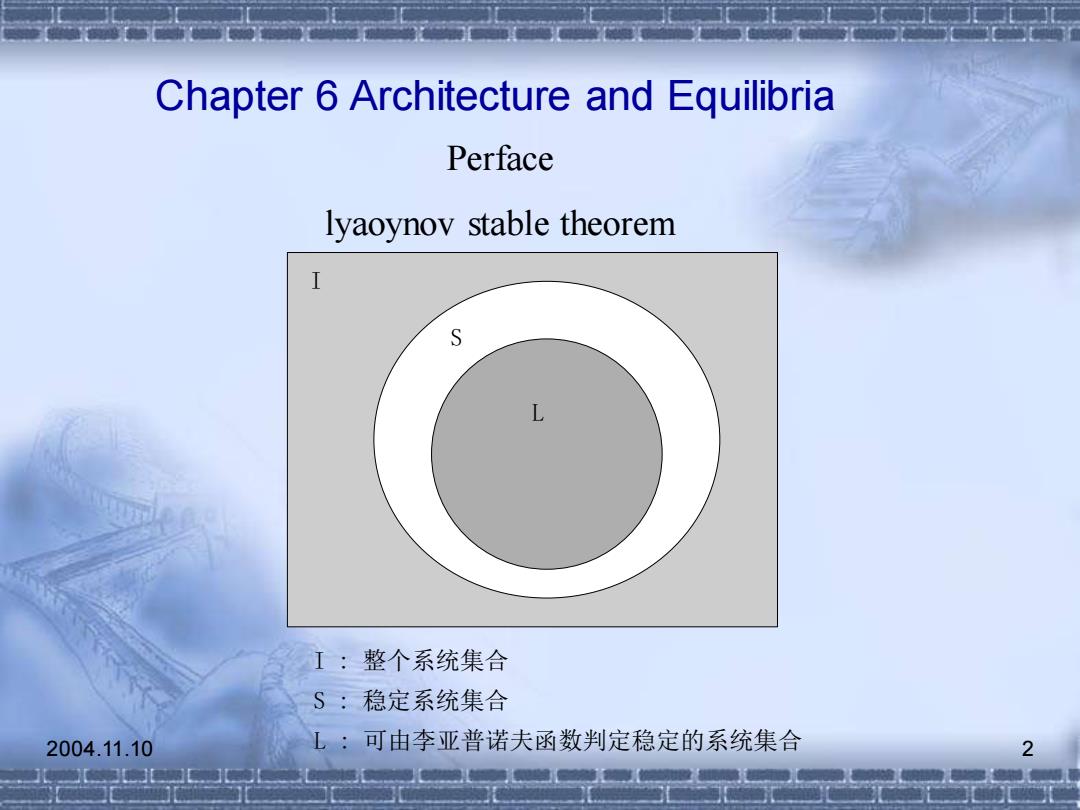

Preface: Lyapunov stability theorem (2004.11.10)

- I: the set of all systems
- S: the set of stable systems
- L: the set of systems whose stability can be established with a Lyapunov function

The sets are nested: $L \subseteq S \subseteq I$.
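As a minimal illustration of a system that belongs to L (this example is not taken from the slides; the system and the candidate function $V$ are assumptions chosen for simplicity), consider a scalar system with a quadratic Lyapunov function:

$$
\dot{x} = -x, \qquad V(x) = \tfrac{1}{2}x^2 \ge 0, \qquad \dot{V} = x\,\dot{x} = -x^2 \le 0 .
$$

Since $V$ never increases along trajectories, the equilibrium $x = 0$ is stable. Failing to find such a $V$ does not show instability, which is why L can be a proper subset of S.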

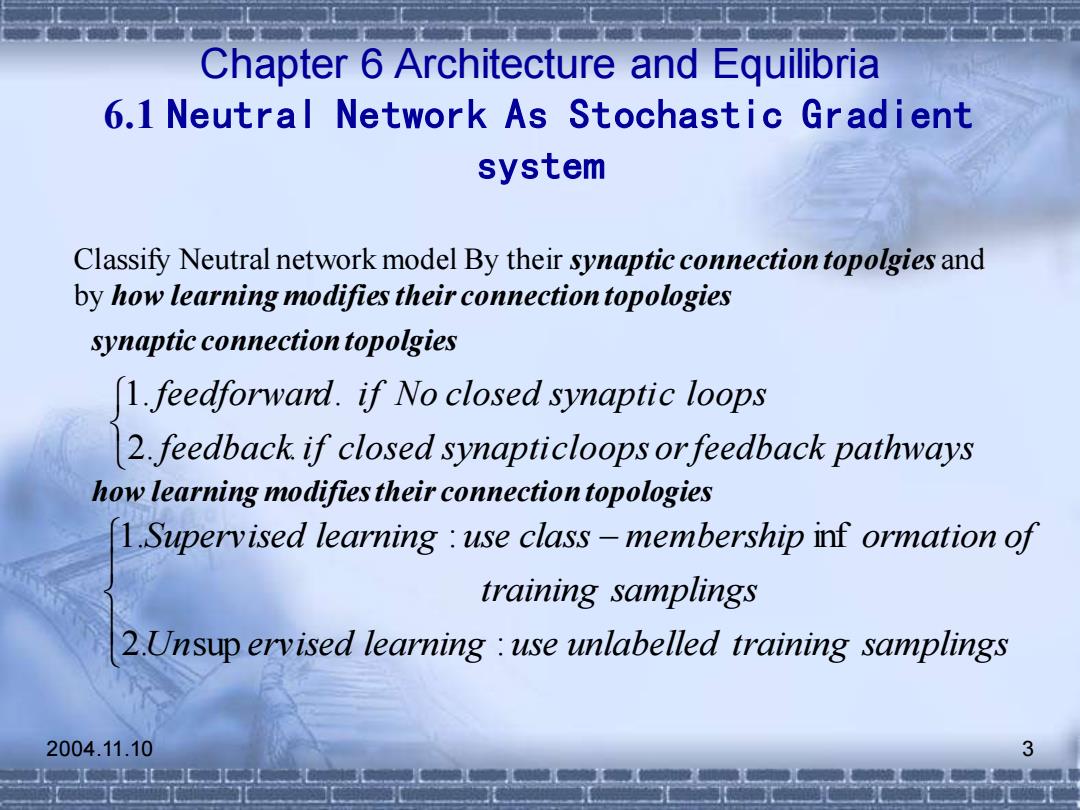

6.1 Neural Networks as Stochastic Gradient Systems

We classify neural network models by their synaptic connection topologies and by how learning modifies those topologies.

Synaptic connection topologies:
1. Feedforward: no closed synaptic loops.
2. Feedback: closed synaptic loops or feedback pathways.

How learning modifies the connection topologies:
1. Supervised learning uses the class-membership information of the training samples.
2. Unsupervised learning uses unlabelled training samples.
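The topological criterion above (closed synaptic loops or not) can be sketched as a cycle check on a directed graph. This is only an illustration under stated assumptions: the dictionary representation and the names `has_closed_loop` and `classify_topology` are hypothetical and do not come from the slides.

```python
from typing import Dict, List

def has_closed_loop(connections: Dict[str, List[str]]) -> bool:
    """Return True if the directed synaptic graph contains a cycle (depth-first search)."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    nodes = set(connections) | {t for targets in connections.values() for t in targets}
    color = {n: WHITE for n in nodes}

    def visit(node: str) -> bool:
        color[node] = GRAY
        for nxt in connections.get(node, []):
            if color[nxt] == GRAY:  # edge back into the current path: closed loop
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color[n] == WHITE and visit(n) for n in nodes)

def classify_topology(connections: Dict[str, List[str]]) -> str:
    """Label a network 'feedback' if it has a closed synaptic loop, else 'feedforward'."""
    return "feedback" if has_closed_loop(connections) else "feedforward"

# Hypothetical example networks: neuron name -> neurons it projects to.
layered = {"x1": ["h1"], "x2": ["h1"], "h1": ["y1"]}
bidirectional = {"a": ["b"], "b": ["a"]}

print(classify_topology(layered))        # feedforward
print(classify_topology(bidirectional))  # feedback
```

The second network has a two-neuron loop, the simplest case of a feedback pathway, so it is classified as feedback.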

Neural network taxonomy (rows: how the network encodes; columns: how it decodes)

| Encode \ Decode | Feedforward | Feedback |
|---|---|---|
| Supervised | Gradient descent, LMS, backpropagation, reinforcement learning | Recurrent backpropagation |
| Unsupervised | Vector quantization, self-organizing maps, competitive learning, counter-propagation | RABAM, Brownian annealing, ABAM, ART-2, BAM-Cohen-Grossberg model, Hopfield circuit, brain-state-in-a-box, adaptive resonance (ART-1, ART-2) |

Three stochastic gradient systems represent the three main categories:
1. Feedforward supervised neural networks trained with the backpropagation (BP) algorithm.
2. Feedforward unsupervised competitive learning or adaptive vector quantization (AVQ) networks.
3. Feedback unsupervised random adaptive bidirectional associative memory (RABAM) networks.

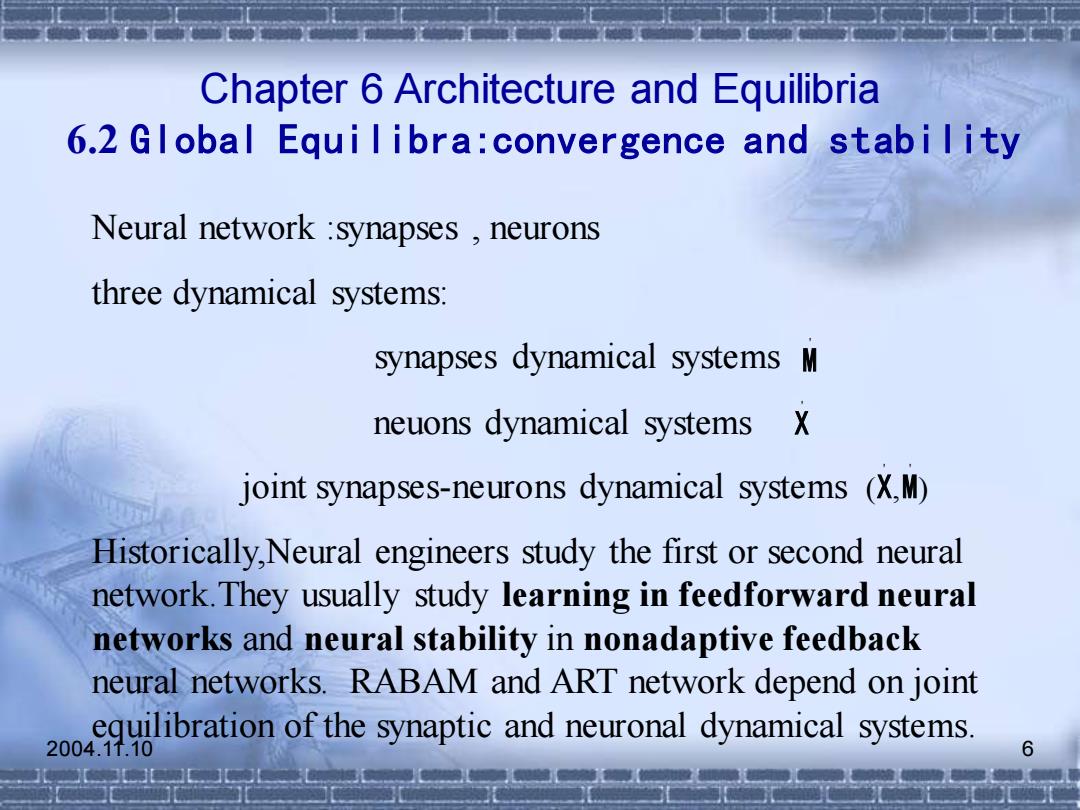

6.2 Global Equilibria: Convergence and Stability

A neural network consists of synapses and neurons, which define three dynamical systems:
1. The synaptic dynamical system $\dot{M}$.
2. The neuronal dynamical system $\dot{X}$.
3. The joint neuronal-synaptic dynamical system $(\dot{X}, \dot{M})$.

Historically, neural engineers have studied the first or the second system in isolation: they usually study learning in feedforward neural networks and neuronal stability in nonadaptive feedback neural networks. RABAM and ART networks depend on joint equilibration of the synaptic and neuronal dynamical systems.

Equilibrium is steady state.

- Convergence is synaptic equilibrium: $\dot{M} = 0$ (6-1)
- Stability is neuronal equilibrium: $\dot{X} = 0$ (6-2)

More generally, neural signals reach steady state even though the activations still change. We denote steady state in the neuronal field $F_X$ by $\dot{F}_X = 0$ (6-3).

Neurons fluctuate faster than synapses fluctuate.

Stability-convergence dilemma: the synapses slowly encode the neuronal patterns being learned, but when the synapses change, this tends to undo the stable neuronal patterns.

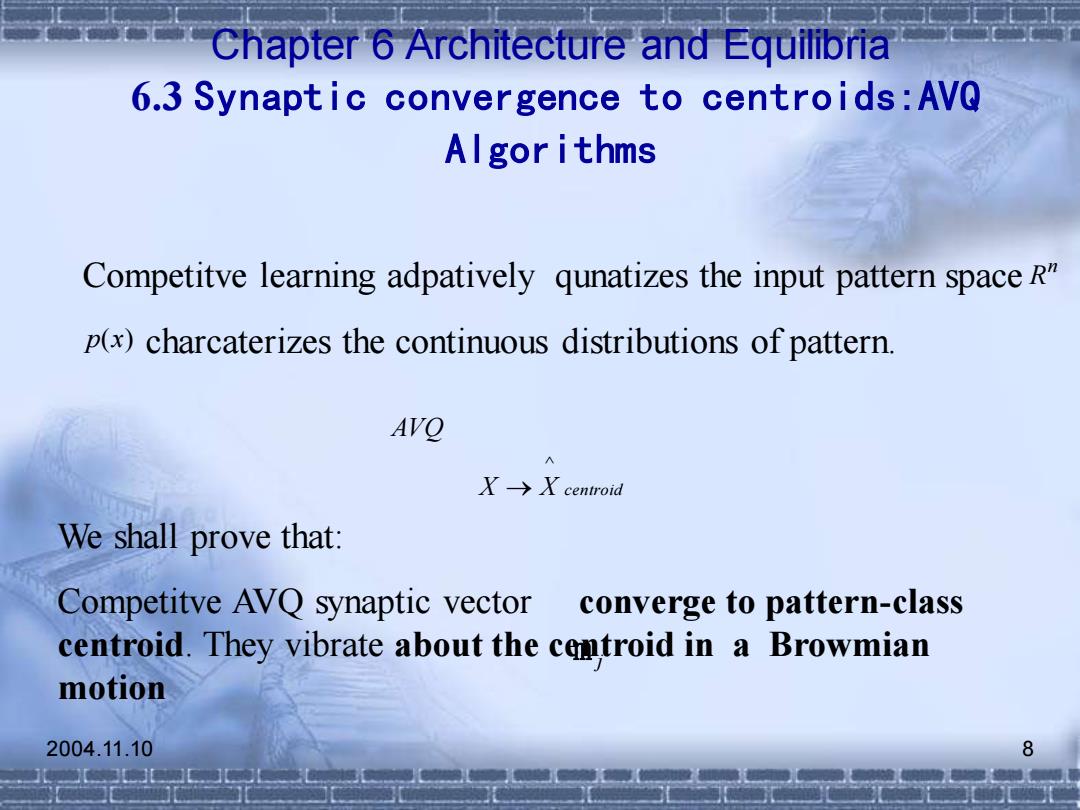

6.3 Synaptic Convergence to Centroids: AVQ Algorithms

Competitive learning adaptively quantizes the input pattern space $R^n$. The probability density $p(x)$ characterizes the continuous distribution of patterns in $R^n$.

AVQ: $X \to \hat{X}$ (centroids)

We shall prove that competitive AVQ synaptic vectors $m_j$ converge to pattern-class centroids. They vibrate about the centroids in a Brownian motion.

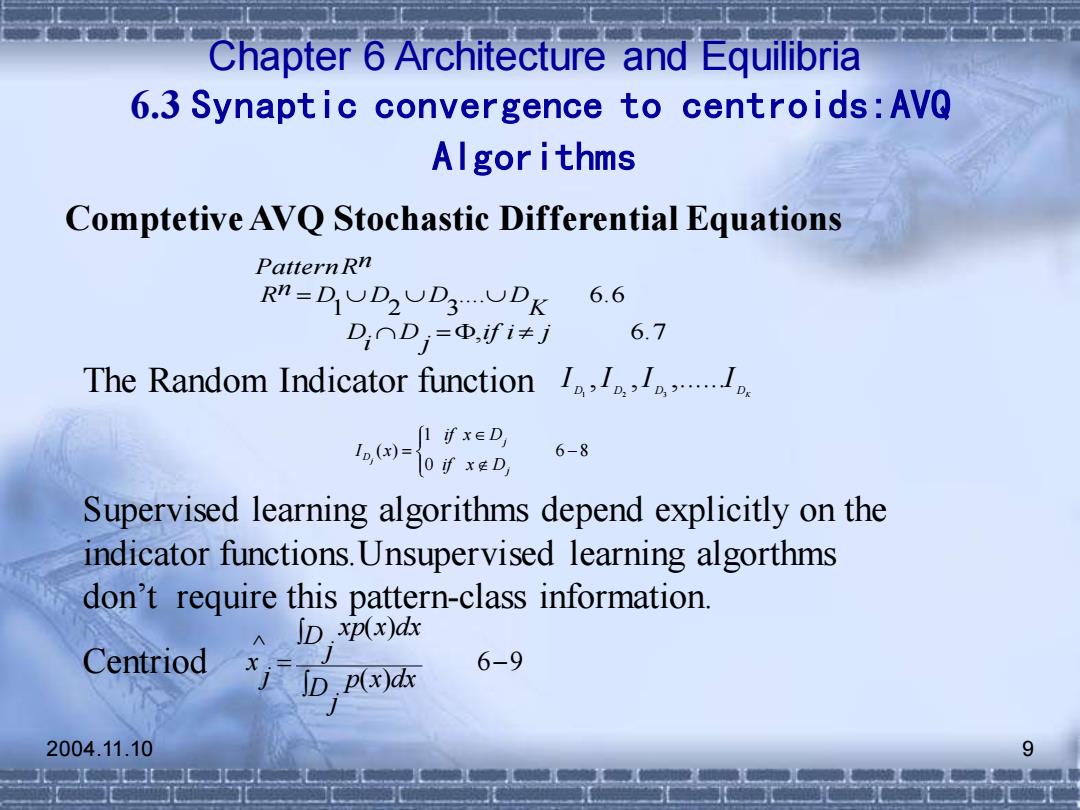

Competitive AVQ stochastic differential equations

The pattern space $R^n$ is partitioned into $K$ disjoint decision classes:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \cdots \cup D_K \tag{6-6}$$

$$D_i \cap D_j = \emptyset \quad \text{if } i \neq j \tag{6-7}$$

The random indicator functions $I_{D_1}, I_{D_2}, I_{D_3}, \ldots, I_{D_K}$:

$$I_{D_j}(x) = \begin{cases} 1 & \text{if } x \in D_j \\ 0 & \text{if } x \notin D_j \end{cases} \tag{6-8}$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms do not require this pattern-class information.

Centroid of $D_j$:

$$\hat{x}_j = \frac{\int_{D_j} x\,p(x)\,dx}{\int_{D_j} p(x)\,dx} \tag{6-9}$$
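The indicator functions (6-8) and the centroid (6-9) can be checked numerically with a Monte Carlo estimate. The sketch below is an illustration only: the uniform density on the square $[-1, 1]^2$, the two half-plane decision classes, and the function names `indicator` and `centroid` are assumptions, not part of the slides.

```python
import numpy as np

# Assumption: p(x) is uniform on the square [-1, 1]^2 and there are K = 2
# decision classes, D_1 = {x : x_1 < 0} and D_2 = {x : x_1 >= 0}.
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(200_000, 2))

def indicator(x: np.ndarray, j: int) -> np.ndarray:
    """I_{D_j}(x) from (6-8): 1.0 where the sample lies in D_j, 0.0 elsewhere."""
    in_d1 = x[:, 0] < 0
    return (in_d1 if j == 1 else ~in_d1).astype(float)

def centroid(x: np.ndarray, j: int) -> np.ndarray:
    """Monte Carlo estimate of (6-9): sample average of x restricted to D_j."""
    w = indicator(x, j)
    return (w[:, None] * x).sum(axis=0) / w.sum()

c1 = centroid(samples, 1)
c2 = centroid(samples, 2)
print("centroid of D_1:", c1)
print("centroid of D_2:", c2)
```

For this density the exact centroids are $(-0.5, 0)$ and $(0.5, 0)$, so the estimates should land close to those values. The two indicators sum to 1 at every sample, which reflects the partition properties (6-6) and (6-7).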

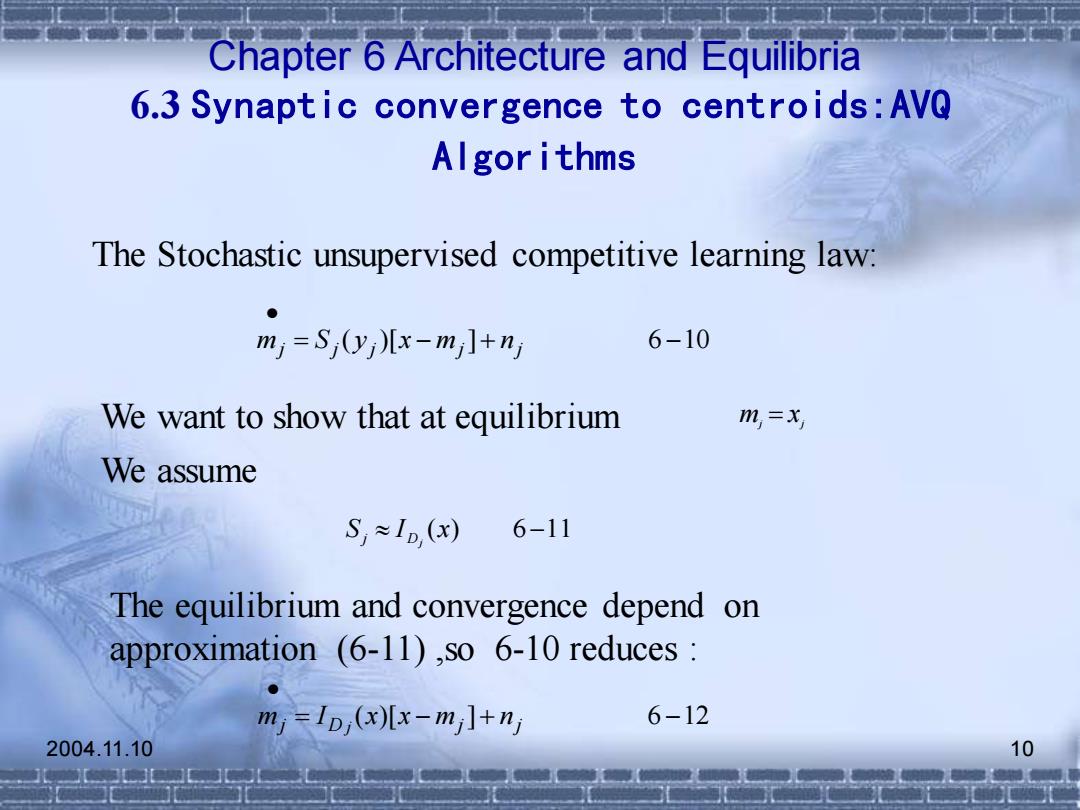

The stochastic unsupervised competitive learning law:

$$\dot{m}_j = S_j(y_j)\,[x - m_j] + n_j \tag{6-10}$$

We want to show that at equilibrium $m_j = \hat{x}_j$.

We assume

$$S_j \approx I_{D_j}(x) \tag{6-11}$$

The equilibrium and convergence results depend on approximation (6-11), so (6-10) reduces to

$$\dot{m}_j = I_{D_j}(x)\,[x - m_j] + n_j \tag{6-12}$$
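The reduced learning law (6-12) can be simulated to see the synaptic vectors settle near the class centroids and then jitter around them. This is a rough sketch under stated assumptions, not the authors' procedure: the Euler-Maruyama discretization, the step size `dt`, the Gaussian noise scale `sigma`, the uniform density on $[-1, 1]^2$, and the two half-plane classes are all chosen here for illustration.

```python
import numpy as np

def simulate_avq(steps: int = 20_000, dt: float = 0.01,
                 sigma: float = 0.01, seed: int = 0) -> np.ndarray:
    """Euler-Maruyama simulation of (6-12) for two synaptic vectors m_1, m_2.

    Assumptions (not from the slides): x is uniform on [-1, 1]^2,
    D_1 = {x_1 < 0}, D_2 = {x_1 >= 0}, and n_j is zero-mean Gaussian noise.
    Returns the trajectory with shape (steps, 2, 2).
    """
    rng = np.random.default_rng(seed)
    m = rng.uniform(-1.0, 1.0, size=(2, 2))  # row j-1 holds m_j
    history = np.empty((steps, 2, 2))
    for t in range(steps):
        x = rng.uniform(-1.0, 1.0, size=2)                   # random pattern sample
        ind = np.array([x[0] < 0, x[0] >= 0], dtype=float)   # I_{D_1}(x), I_{D_2}(x)
        noise = sigma * np.sqrt(dt) * rng.standard_normal((2, 2))
        # Only the vector whose class contains x moves toward x.
        m = m + dt * ind[:, None] * (x - m) + noise
        history[t] = m
    return history

history = simulate_avq()
tail_mean = history[len(history) // 2:].mean(axis=0)  # time average after the transient
print("time-averaged m_1:", tail_mean[0])
print("time-averaged m_2:", tail_mean[1])
```

With these assumptions the exact centroids are $(-0.5, 0)$ and $(0.5, 0)$, and the time-averaged synaptic vectors should sit near them while individual iterates keep fluctuating. The averaging argument behind this: with zero-mean noise, setting the expected right-hand side of (6-12) to zero gives $\int_{D_j} (x - m_j)\,p(x)\,dx = 0$, which rearranges to the centroid formula (6-9).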