Statistical Learning Theory and Applications
Lecture 8: Data Representation - Parametric Model
Instructor: Quan Wen
SCSE@UESTC, Fall 2021

Outline (Level 1)
1 Probability Density Estimation
2 Maximum Likelihood Estimation
3 MAP estimation
4 Bayesian estimation
5 Expectation maximization

1. Probability Density Estimation
Basic Concept
1 Density estimation: estimating the probability density function $p(x)$ from a given set of training samples $D = \{x_1, x_2, \ldots, x_N\}$.
2 Estimated density: denoted by $\hat{p}(x)$.
3 Training samples are i.i.d. and distributed according to $p(x)$.
4 Parametric estimation: estimate the parameter vector $\theta$ of $p(x; \theta)$.
5 Non-parametric estimation: estimate the function $p : \mathcal{X} \to \mathbb{R}$ directly.
6 Because the number of training samples is finite, the estimated function (density) will contain some error.

▶ Parametric density estimation assumes that the global form of the distribution, i.e. its functional form, is known, so only its parameters have to be estimated.
  • In practice, however, the form of the distribution is often unknown.
▶ Non-parametric estimation does not assume a known form and can therefore be applied to any probability distribution.
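The contrast between the two approaches can be sketched in a few lines of Python. This is a minimal illustration, not part of the lecture: the Gaussian form, the histogram estimator, the bin range, and the helper names `fit_gaussian` and `histogram_density` are all assumptions chosen for the example.

```python
import math
import random


def fit_gaussian(samples):
    """Parametric estimate: assume a Gaussian form, estimate theta = (mu, var)."""
    n = len(samples)
    mu = sum(samples) / n
    var = sum((x - mu) ** 2 for x in samples) / n
    return mu, var


def gaussian_pdf(x, mu, var):
    """Density of a univariate Gaussian with mean mu and variance var."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def histogram_density(samples, lo, hi, bins):
    """Non-parametric estimate: piecewise-constant density from bin counts."""
    width = (hi - lo) / bins
    counts = [0] * bins
    for x in samples:
        if lo <= x < hi:
            counts[min(int((x - lo) / width), bins - 1)] += 1
    n = len(samples)

    def p_hat(x):
        if not (lo <= x < hi):
            return 0.0
        return counts[min(int((x - lo) / width), bins - 1)] / (n * width)

    return p_hat


# Synthetic training set (assumed for illustration): N(2.0, 1.5^2).
random.seed(0)
data = [random.gauss(2.0, 1.5) for _ in range(5000)]

mu_hat, var_hat = fit_gaussian(data)                # two numbers describe the density
p_hist = histogram_density(data, -6.0, 10.0, 40)    # a whole function is estimated
```

The parametric fit compresses the data into two numbers but is only as good as the assumed form; the histogram makes no such assumption but needs a bin width and enough samples per bin.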

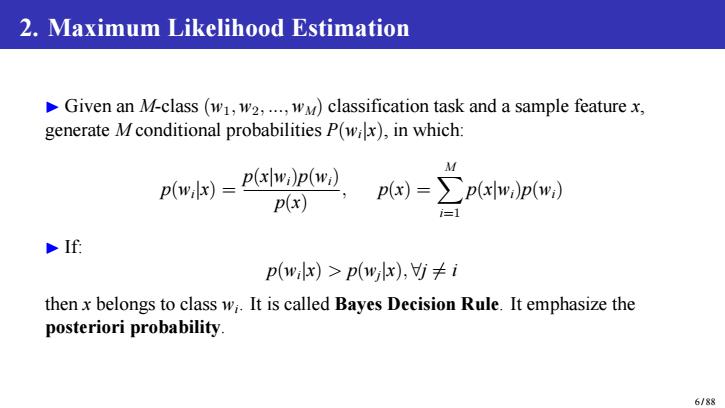

2. Maximum Likelihood Estimation
▶ Given an M-class ($w_1, w_2, \ldots, w_M$) classification task and a sample feature $x$, compute the $M$ conditional probabilities $p(w_i \mid x)$, $i = 1, \ldots, M$, where:
$$p(w_i \mid x) = \frac{p(x \mid w_i)\, p(w_i)}{p(x)}, \qquad p(x) = \sum_{j=1}^{M} p(x \mid w_j)\, p(w_j)$$
▶ If $p(w_i \mid x) > p(w_j \mid x)$ for all $j \neq i$, then $x$ belongs to class $w_i$. This is called the Bayes decision rule. It is based on the posterior probability.
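A minimal sketch of the Bayes decision rule follows. The two-class setup, the Gaussian class-conditional densities, the equal priors, and the function names `posteriors` and `bayes_decide` are assumptions made for illustration only.

```python
import math


def gaussian_pdf(x, mu, var):
    """Density of a univariate Gaussian with mean mu and variance var."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def posteriors(x, priors, likelihoods):
    """Return [p(w_i | x)] using p(x) = sum_j p(x | w_j) p(w_j)."""
    joint = [lik(x) * prior for lik, prior in zip(likelihoods, priors)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


def bayes_decide(x, priors, likelihoods):
    """Return the index i of the class with the largest posterior p(w_i | x)."""
    post = posteriors(x, priors, likelihoods)
    return max(range(len(post)), key=post.__getitem__)


# Assumed example: two classes with equal priors and unit-variance Gaussians.
priors = [0.5, 0.5]
likelihoods = [
    lambda x: gaussian_pdf(x, 0.0, 1.0),  # p(x | w_1)
    lambda x: gaussian_pdf(x, 3.0, 1.0),  # p(x | w_2)
]

label_near_first = bayes_decide(0.2, priors, likelihoods)   # index 0, i.e. w_1
label_near_second = bayes_decide(2.9, priors, likelihoods)  # index 1, i.e. w_2
```

With equal priors and equal variances the decision boundary sits midway between the two means; changing the priors shifts it toward the less probable class.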

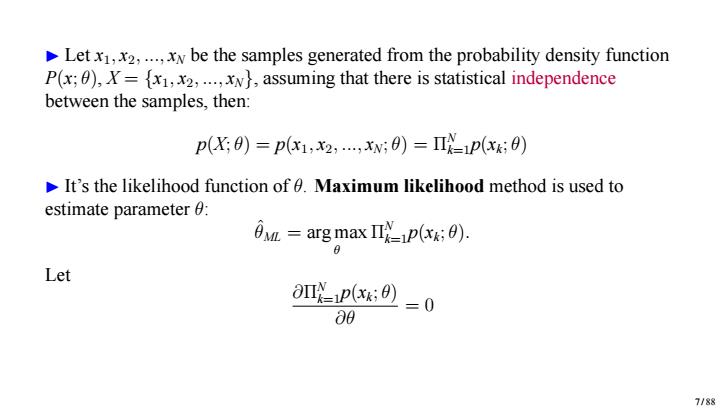

▶ Let $x_1, x_2, \ldots, x_N$ be samples generated from the probability density function $p(x; \theta)$, and let $X = \{x_1, x_2, \ldots, x_N\}$. Assuming the samples are statistically independent:
$$p(X; \theta) = p(x_1, x_2, \ldots, x_N; \theta) = \prod_{k=1}^{N} p(x_k; \theta)$$
▶ Viewed as a function of $\theta$, this is the likelihood function. The maximum likelihood method estimates the parameter $\theta$ as:
$$\hat{\theta}_{ML} = \arg\max_{\theta} \prod_{k=1}^{N} p(x_k; \theta)$$
The estimate is sought by setting the derivative with respect to $\theta$ to zero:
$$\frac{\partial \prod_{k=1}^{N} p(x_k; \theta)}{\partial \theta} = 0$$
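As a small numerical illustration of the arg max, the sketch below evaluates the likelihood product on a grid of $\theta$ values. The exponential density $p(x; \theta) = \theta e^{-\theta x}$, the made-up samples, and the grid are assumptions for this example; for this particular density, setting the derivative to zero gives $\hat{\theta}_{ML} = N / \sum_k x_k$, which the grid search should reproduce up to the grid spacing.

```python
import math


def exp_pdf(x, theta):
    """Assumed density for illustration: p(x; theta) = theta * exp(-theta * x), x >= 0."""
    return theta * math.exp(-theta * x)


def likelihood(samples, theta):
    """p(X; theta) = prod_k p(x_k; theta), valid under the independence assumption."""
    value = 1.0
    for x in samples:
        value *= exp_pdf(x, theta)
    return value


def ml_grid_search(samples, grid):
    """Return the grid point that maximizes the likelihood."""
    return max(grid, key=lambda theta: likelihood(samples, theta))


samples = [0.5, 1.2, 0.3, 2.0, 0.8, 1.1]      # made-up data
grid = [0.001 * i for i in range(1, 5001)]    # theta in (0, 5], step 0.001

theta_grid = ml_grid_search(samples, grid)    # numerical arg max
theta_closed = len(samples) / sum(samples)    # from d/dtheta = 0 for this density
```

A grid search is only practical for one or two parameters; it is used here because it mirrors the arg max definition directly.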

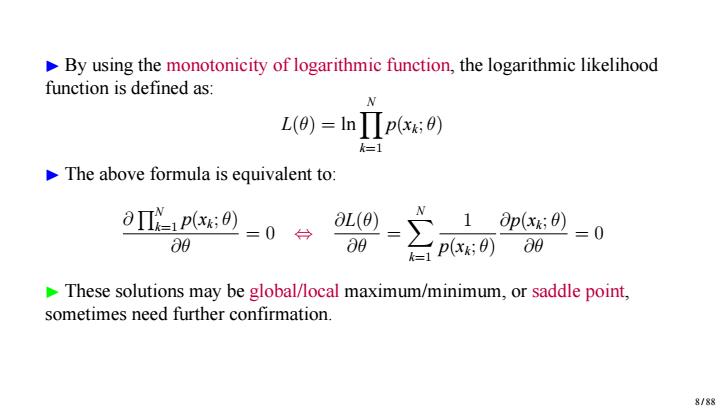

▶ Because the logarithm is monotonically increasing, the likelihood and its logarithm are maximized by the same $\theta$. The log-likelihood function is defined as:
$$L(\theta) = \ln \prod_{k=1}^{N} p(x_k; \theta) = \sum_{k=1}^{N} \ln p(x_k; \theta)$$
▶ The zero-derivative condition on the likelihood is therefore equivalent to:
$$\frac{\partial \prod_{k=1}^{N} p(x_k; \theta)}{\partial \theta} = 0 \;\Leftrightarrow\; \frac{\partial L(\theta)}{\partial \theta} = \sum_{k=1}^{N} \frac{1}{p(x_k; \theta)} \frac{\partial p(x_k; \theta)}{\partial \theta} = 0$$
▶ A solution of this equation may be a global or local maximum, a minimum, or a saddle point, so it sometimes needs further verification.
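Besides simplifying the derivative, the log-likelihood is also easier to handle numerically. The sketch below assumes, purely for illustration, a unit-variance Gaussian with unknown mean $\mu$, for which $\partial L / \partial \mu = \sum_k (x_k - \mu)$. It shows that the raw product underflows for a moderately large $N$ while the sum of logs stays finite, and that the derivative vanishes at the sample mean.

```python
import math
import random


def log_pdf(x, mu):
    """ln p(x; mu) for a Gaussian with unit variance (assumed model)."""
    return -0.5 * math.log(2 * math.pi) - 0.5 * (x - mu) ** 2


def likelihood(samples, mu):
    """Raw product prod_k p(x_k; mu); underflows to 0.0 for large N."""
    value = 1.0
    for x in samples:
        value *= math.exp(log_pdf(x, mu))
    return value


def log_likelihood(samples, mu):
    """L(mu) = sum_k ln p(x_k; mu)."""
    return sum(log_pdf(x, mu) for x in samples)


def score(samples, mu):
    """dL/dmu = sum_k (1/p) dp/dmu, which reduces to sum_k (x_k - mu) here."""
    return sum(x - mu for x in samples)


# Synthetic samples (assumed for illustration): N(1.0, 1.0).
random.seed(1)
samples = [random.gauss(1.0, 1.0) for _ in range(2000)]
mu_hat = sum(samples) / len(samples)      # root of score(samples, mu) = 0

product_value = likelihood(samples, mu_hat)   # underflows in double precision
log_value = log_likelihood(samples, mu_hat)   # remains a finite negative number
```

Comparing `log_likelihood` at `mu_hat` with nearby values is one simple way to carry out the further verification mentioned above, i.e. to check that the stationary point is a maximum rather than a minimum or saddle point.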

Outline (Level 2)
2 Maximum Likelihood Estimation
  Example 1: Normal distribution with unknown mean $\mu$ and variance $\sigma^2$
    Unbiased & biased estimates of $\mu$ and $\sigma^2$
    What is the global optimum of the Gaussian ML estimate?
    Exponential family
  Example 2: Normal distribution with known covariance matrix $\Sigma$ and unknown mean $\mu$